The initial idea of so-called data lakes was to be able to process, transform and dig through huge data sets of unstructured, semi-structured and structured data. It was fairly simple: you put your data set on a Hadoop Distributed File System cluster and wrote one or more MapReduce jobs, which parallelised the processing steps, to retrieve the results you were aiming for. Nowadays, it’s not that simple any more, since the number of databases, tools and frameworks that are supposed to make this work easier, but also more secure, has grown rapidly.

This article is a side product of a talk I gave for a Cloudera Foundation project and depicts the history of data security and data governance. I start with Wild West-like scenarios, in which data lake security was neither an option nor a requirement and nobody actually talked about data governance yet. In the course of this article we walk through different epochs up to the present and describe the state-of-the-art capabilities of data lakes that help companies make as sure as possible that their data lakes are not breached and that no Personally Identifiable Information (PII) is leaked. The epochs I’m describing are just like the real Stone Age, Bronze Age, Iron Age, … – very dependent on the location: some regions are further developed, while others are slower but may skip eras. Hence, the estimated time windows are only vaguely defined.

Data Lake Stone Age (5-15 Years Ago)

In the beginning, a lot of companies had one or a few huge data sets, mostly for one or a few use cases. The only way of securing this data was a firewall that blocked users from reaching the cluster. If you had network access to the cluster, you had access to the data on the cluster. In other words: nothing but the firewall prevented users from accessing the data anonymously. For some companies or departments, especially smaller ones, this was acceptable. It was fairly easy to implement and it was based on mutual trust between the stakeholders, the data owners and the users of the data platform.

Trust as a Security Measure is Simply Not Enough

This approach was a good – and the only – way to kick off the project “data lake”. As the number of data sets on the data lake grew, the number of stakeholders grew and suddenly – oh surprise – the concept of mutual trust as a security measure began to fail. Not only was it impossible for all users to know each other well enough to trust each other, two more risks are always introduced when multiple people work together: human failure and conflicts of interest. It soon became a requirement to build “secure” data lakes.

I remember being at a data conference and listening to a talk about data infrastructure. The speakers explained all the fancy stuff they were doing, emphasised multi-tenancy, high flexibility and scalability, and a few other buzzwords. At the end of the talk there was a question from the audience: “How do you do all of this with ‘Security enabled’?” The answer: “Well, we don’t have ‘Security enabled’ on our data platform.” That was in 2016.

Bronze Age: What does “Secure” Actually Mean? (4-10 Years Ago)

The basic idea of securing a cluster is to grant certain people access to certain data sets and to deny access to everyone else. Almost every database technology comes with one or more ways to define policies that let the administrator specify who can access which data set within this database. This is known as authorisation. The issue here is that you still need a mechanism to prove to the database system who you are. This is known as authentication. Usually this involves a username and a matching password. This sounds simple, but on scalable, distributed systems it poses complex challenges that have been solved in different ways. One of the most widespread, and at the same time oldest, mechanisms used to implement authentication on data lakes is the Kerberos protocol.
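To make the difference between the two terms concrete, here is a minimal sketch in Python. It is not Kerberos and not any particular database's API: the in-memory `USERS` and `READ_POLICIES` stores, the user names and the data set names are all made up for illustration. It only shows that authentication (who are you?) and authorisation (what may you do?) are two separate checks.

```python
import hashlib
import hmac
import os

# Hypothetical user store: username -> (salt, password hash).
USERS = {}

# Hypothetical policy store: username -> data sets the user may read.
READ_POLICIES = {"alice": {"sales"}, "bob": {"sales", "hr"}}


def _hash(password: str, salt: bytes) -> bytes:
    # Salted, slow hash so that plain passwords are never stored.
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, 100_000)


def register(username: str, password: str) -> None:
    salt = os.urandom(16)
    USERS[username] = (salt, _hash(password, salt))


def authenticate(username: str, password: str) -> bool:
    """Authentication: is the caller really who they claim to be?"""
    if username not in USERS:
        return False
    salt, stored = USERS[username]
    return hmac.compare_digest(stored, _hash(password, salt))


def authorise(username: str, dataset: str) -> bool:
    """Authorisation: may this (already authenticated) user read the data set?"""
    return dataset in READ_POLICIES.get(username, set())


def read_dataset(username: str, password: str, dataset: str) -> str:
    if not authenticate(username, password):
        raise PermissionError("authentication failed")
    if not authorise(username, dataset):
        raise PermissionError(f"{username} may not read {dataset}")
    return f"contents of {dataset}"
```

In a real, distributed cluster the password check is replaced by something like a Kerberos ticket, precisely because sending passwords to every single service does not scale and is not safe.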

Becoming Compliant was Possible – Not Easy

At that time, we could authenticate ourselves and access data that we were authorised to use. So, what else did we actually need? Especially in – but not limited to – the financial services industry, it was always a requirement to keep an access log that answers the questions of who was reading or writing data and when that happened. Most database systems can deliver on that. In the meantime, the world of (open source) databases and data storage engines got quite complex and manifold. There’s a data storage system for each use case you could possibly think of: Do you need to archive raw data at a cheap rate and keep it available for processing later? Do you want to do simple, yet low-latency lookups of certain key words and retrieve information associated with these? Do you want to be able to full-text search documents? Do you want to run SQL queries that are not time critical, or rather implement real-time dashboards? All of those use cases require your data to be stored in different database systems. Sometimes the same data is stored multiple times, in different forms, in multiple database systems, following the so-called polyglot persistence architecture. All of those systems have a way of doing authentication, authorisation and audit, and all of them work similarly, but each one is more or less different. And exactly this makes them insanely complicated to administer: there are many different systems, each with its own implementation of “Security”.
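The who, what and when of an access log can be sketched as one common record format. The following Python snippet is only an illustration under my own assumptions: the `AuditEvent` fields and the system names used in the usage example are hypothetical and do not correspond to the audit format of any specific database.

```python
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone


@dataclass(frozen=True)
class AuditEvent:
    user: str       # who accessed the data
    action: str     # "read" or "write"
    resource: str   # which data set was touched
    system: str     # which storage system produced the event
    timestamp: str  # when it happened, ISO 8601 in UTC


class AuditLog:
    """Collects audit events from several systems in one place."""

    def __init__(self):
        self._events = []

    def record(self, user, action, resource, system, when=None):
        when = when or datetime.now(timezone.utc)
        event = AuditEvent(user, action, resource, system, when.isoformat())
        self._events.append(event)
        return event

    def who_accessed(self, resource):
        # Answers "who was reading or writing this data set?"
        return sorted({e.user for e in self._events if e.resource == resource})

    def to_json_lines(self):
        # One JSON document per line, a common format for shipping logs.
        return "\n".join(json.dumps(asdict(e), sort_keys=True) for e in self._events)
```

For example, `log.record("alice", "read", "sales", "hive")` followed by `log.who_accessed("sales")` returns `["alice"]`. The pain described above comes from the fact that every storage system writes its own variant of such a record.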

One Service to Secure them All – Problem Solved!?

In retrospect, the next development was as necessary as it now seems obvious. We needed services that could administer authorisation and collect audit logs in a single place. The development of tools such as Apache Ranger and Apache Sentry was initiated. It suddenly became easier and more scalable to manage role-based security access policies across multiple database systems, an approach also referred to as role-based access control (RBAC).
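The core idea of RBAC fits into a few lines of Python. This is a simplified sketch and explicitly not the policy model or API of Apache Ranger or Apache Sentry; the `PolicyStore` class and the role, system and resource names in the usage note are invented for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class PolicyStore:
    """One central place for policies that span several storage systems."""

    # role -> set of (system, resource, action) permissions
    role_permissions: dict = field(default_factory=dict)
    # user -> set of roles
    user_roles: dict = field(default_factory=dict)

    def grant(self, role, system, resource, action):
        self.role_permissions.setdefault(role, set()).add((system, resource, action))

    def assign(self, user, role):
        self.user_roles.setdefault(user, set()).add(role)

    def is_allowed(self, user, system, resource, action):
        # A user is allowed if any of their roles carries the permission.
        return any(
            (system, resource, action) in self.role_permissions.get(role, set())
            for role in self.user_roles.get(user, set())
        )
```

Permissions are attached to roles, not to people. Granting the role `analyst` read access to `sales` once and then assigning users to that role is all it takes; on-boarding a new analyst is a single `assign` call instead of one policy per database.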

Iron Age: Why Data Lakes are not Necessarily Like Wines (1-6 Years Ago)

While security was one problem that seemed to be fixed, companies wanted to answer more questions about their data, especially because, if they couldn’t, they risked losing data or seeing the data in their data lake become worthless. These questions were:

- Where does my data come from? (Lineage)

- What are the processing steps of the data I’m using?

- Who owns the data I’m using?

- Who is using the data I own?

- What is the meaning of the data sets available in a data lake?

If you couldn’t answer these questions while you were still on-boarding new data sources on a daily basis and continuously granting data access to new people, you soon had a problem. You had a data swamp. [Play ominous music in the background while reading the last sentence].

A data swamp is a deteriorated and unmanaged data lake that is either inaccessible to its intended users or is providing little value.

Wikipedia

Data lakes are less like wines that become better with age and more like relationships that become better if you take care of them. [At least that’s what people who have friends tell me].

The Toolset is Expanded and New Roles Emerge

Similar to the Iron Age, which is marked as the time when humans started to create superior tools made out of iron, the Iron Age for data lakes starts in your company when you can answer the questions above efficiently, correctly and in a scalable way. Much as in the Iron Age, this requires appropriate tooling that wasn’t there before. One of those tools emerging in the open source world was Apache Atlas. It started out rudimentary, but grew rapidly with the companies’ requirements. In the beginning you could tag data stored in a few data storage systems and display its lineage. Later, the number of supported systems grew thanks to open standards, and the metadata categories you could assign were expanded. A demand for a new role emerged: the data steward, a person who is responsible for making sure that metadata questions around a data lake can be answered at all times. Unfortunately, the name of the role sounds as boring as the role is important.
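What tagging and lineage mean in practice can be sketched with a tiny metadata catalog. The Python below is a toy model under my own assumptions, not the Apache Atlas data model or API; the `Catalog` class and all data set, owner and step names in the usage note are hypothetical.

```python
class Catalog:
    """Toy metadata catalog: owners, tags and lineage of data sets."""

    def __init__(self):
        self.owner = {}    # data set -> owner
        self.tags = {}     # data set -> set of tags
        self.parents = {}  # data set -> set of direct input data sets
        self.process = {}  # (input, output) -> processing step

    def register(self, dataset, owner, tags=()):
        self.owner[dataset] = owner
        self.tags.setdefault(dataset, set()).update(tags)

    def derive(self, output, inputs, step):
        # Record that `output` was produced from `inputs` by `step`.
        for source in inputs:
            self.parents.setdefault(output, set()).add(source)
            self.process[(source, output)] = step

    def lineage(self, dataset):
        """All upstream data sets, found by walking the lineage graph."""
        seen, stack = set(), [dataset]
        while stack:
            for parent in self.parents.get(stack.pop(), set()):
                if parent not in seen:
                    seen.add(parent)
                    stack.append(parent)
        return seen
```

After `register("raw_orders", "ingest-team", tags={"PII"})`, `derive("clean_orders", ["raw_orders"], "deduplicate")` and `derive("revenue_report", ["clean_orders"], "aggregate by month")`, calling `lineage("revenue_report")` returns both upstream data sets. That answers "where does my data come from?", while `owner` and `process` answer the ownership and processing-step questions.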

Next Level: Producing Steel in the Iron Age

After reading through a few of the previous paragraphs, I think you get the hang of it: the number of data sets grows again, the number of users grows again and, as a result, new problems emerge. We have now reached a point where it is simply painful to manage security policies and keep track of them at the same time. At this point there might be hundreds of policies per database, each policy matching a certain role or group with a certain data resource. This new challenge required new capabilities of a modern data platform and, similar to the Habsburg success strategy “Tu felix Austria nube”, security and tagging capabilities were married. Henceforth, it was possible to create tag-based policies, thereby reducing the number of security policies by orders of magnitude.
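Why tag-based policies shrink the number of policies becomes visible in a small Python sketch. All tags, roles and data set names are invented, and the rule that a role needs clearance for every tag on a data set is an assumption I made for this example; real tools may combine tags differently.

```python
# Tags assigned to data sets, for example by a data steward.
DATASET_TAGS = {
    "hive.customers": {"PII"},
    "hbase.profiles": {"PII"},
    "hdfs.clickstream": {"PII", "raw"},
    "hive.revenue": {"finance"},
}

# One policy per tag instead of one policy per (role, data set) pair.
TAG_POLICIES = {
    "PII": {"privacy_officer"},
    "finance": {"controller", "analyst"},
    "raw": {"data_engineer", "privacy_officer"},
}


def allowed_roles(dataset):
    """Roles that are cleared for every tag on the data set."""
    tags = DATASET_TAGS.get(dataset, set())
    if not tags:
        return set()  # untagged or unknown data is not covered by any tag policy
    role_sets = [TAG_POLICIES.get(tag, set()) for tag in tags]
    return set.intersection(*role_sets)


def can_access(role, dataset):
    return role in allowed_roles(dataset)
```

Three tag policies cover four data sets here. When the hundredth data set containing PII is on-boarded, it only needs the tag `PII`; no new security policy has to be written.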

One More Problem to Solve

An issue that hasn’t been discussed specifically in this article yet, but should be mentioned, is encryption. There are two types of encryption:

- “Wire encryption”: the SSL/TLS encryption we encounter every day in our browser windows when we visit a website with the prefix https – as opposed to http – “s for secure”. Wire encryption encrypts the communication between two servers, and I’m not going to explain here why this is important. This has been done many times elsewhere on the internet.

- “Encryption at rest” covers data persisted in any storage system, e.g. a local hard drive, a distributed file system or any database system. Especially in times when you might not run your own infrastructure (data center, cloud vendor, …), encryption at rest makes sure that those who administer the infrastructure, and possibly assign policies, cannot actually use the data. The encryption key stays with the designated user or is managed on separate Key Management Servers (KMS) to guarantee that only those who are allowed to use the data (beyond policy assignment) can see the data.

Medieval Times: How to Deal with Regulations and External Policies (0-3 Years Ago)

One might ask why I would compare the time of regulations and governmental policies with medieval times, often known as the dark ages. One good analogy is that we have most of the required tools available from earlier times, but we are just not using them. And this is where the analogy ends already: the reasons for not leveraging technology in medieval times were very different ones…

Let’s recapitulate what we have so far:

- Growing number of data sets

- Growing number of users

- Polyglot persistence

- A set of tools to address security challenges

- A set of tools to address governance challenges

On top of this, new regulations such as the General Data Protection Regulation (GDPR) pose new challenges. An overly brief and overly simplified summary of what GDPR means for a data lake can be found below:

- We need consent to process people’s personal data.

- We need to fulfill contractual obligations with a data subject, i.e.,

  - provide information to the data subject in a concise, transparent, intelligible and easily accessible form,

  - delete any data subject related data on request.

- We need to protect the vital interests of a data subject or another individual through

  - pseudonymisation or tokenisation,

  - keeping records of processing activities,

  - and securing of personal data.

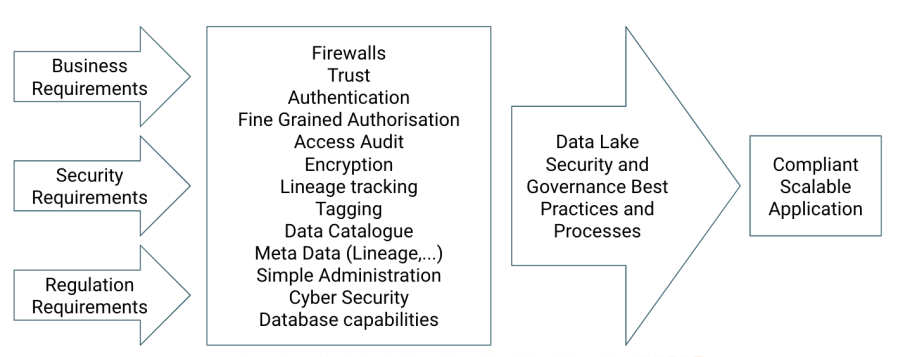

On the one hand, a solution to this is to follow best practices and to establish processes on the data lake using the existing tools. On the other hand, the solution is very individual. Similar to designing a data application based on certain business requirements, we need to make the security and privacy considerations specific to a use case part of these requirements. Example: if a certain data set contains PII that could possibly be presented to the outside, we have multiple options. For example, we could use a storage engine that supports tokenisation of certain PII fields. If the storage engine we need to use to deliver our use case requirements does not support tokenisation, then we need to make sure to tokenise, anonymise or encrypt those fields at the time of data ingestion. If, … – I hope you get the idea. It’s important to look closely into your requirements and then carefully architect a solution based on the capabilities of the data platform and the processes you put in place.
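The option of protecting PII fields at ingestion time could look roughly like the following Python sketch. It uses a keyed hash (HMAC-SHA256) to replace values with deterministic pseudonyms. This is my own simplification: the field names in `PII_FIELDS` are hypothetical, the pseudonyms are one-way (a real tokenisation service usually keeps a protected mapping back to the original value), and in practice the key would live in a key management system rather than in code.

```python
import hashlib
import hmac

# Hypothetical names of the fields that contain PII.
PII_FIELDS = {"name", "email"}


def pseudonymise(value: str, key: bytes) -> str:
    """Keyed, deterministic pseudonym: same value and key give the same token."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def ingest(record: dict, key: bytes) -> dict:
    """Return a copy of the record with all PII fields replaced by pseudonyms."""
    return {
        field: pseudonymise(str(value), key) if field in PII_FIELDS else value
        for field, value in record.items()
    }
```

Because the pseudonym is deterministic, two data sets ingested with the same key can still be joined on the pseudonymised `email` column, while nobody reading the stored data sees the actual address.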

Best Practices to Become and Stay Compliant

The best practices and processes mentioned above can be summarised as:

- Establish processes to manage

  - consent,

  - transparency and intended usage,

  - automatic processing of personal data.

- Leverage dynamic masking and access control: use roles, tags, location and time to restrict access.

- Use the tools mentioned in this article and their capabilities efficiently.

- Become a user- and customer-centric organisation: design your applications with your customers as your most important asset. This makes it easier for you to manage and delete customer-related data (and makes your customers happy as a side effect).
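Dynamic masking based on roles, tags and time, as mentioned in the list above, can be sketched in a few lines of Python. Everything here is an assumption made for illustration: the column tags, the roles, the business hours and the masking rule itself are invented and not taken from any specific product.

```python
from datetime import time

# Hypothetical tags per column; columns missing here count as "unclassified".
COLUMN_TAGS = {"email": {"PII"}, "iban": {"PII", "finance"}, "country": set()}

# Hypothetical roles that may see columns with a given tag unmasked.
UNMASKED_ROLES = {
    "PII": {"privacy_officer"},
    "finance": {"controller", "privacy_officer"},
}

# Hypothetical time window in which unmasked access is possible at all.
BUSINESS_HOURS = (time(8, 0), time(18, 0))


def mask(value: str) -> str:
    """Hide everything except the last two characters; hide short values fully."""
    if len(value) <= 4:
        return "*" * len(value)
    return "*" * (len(value) - 2) + value[-2:]


def view(row: dict, role: str, now: time) -> dict:
    """Return the row as the given role is allowed to see it at this time."""
    within_hours = BUSINESS_HOURS[0] <= now <= BUSINESS_HOURS[1]
    result = {}
    for column, value in row.items():
        # Unknown columns get a tag nobody is cleared for, so they are masked.
        tags = COLUMN_TAGS.get(column, {"unclassified"})
        cleared = all(role in UNMASKED_ROLES.get(tag, set()) for tag in tags)
        if not tags or (cleared and within_hours):
            result[column] = value
        else:
            result[column] = mask(str(value))
    return result
```

The data itself is stored only once. What a user sees is decided at query time, so the same row shows an analyst a masked e-mail address and shows the privacy officer the full value during business hours.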

Renaissance: No System is 100% Secure (0-2 Years Ago)

This article focused on how to prevent data breaches and make it as difficult as possible for people with malicious intent to get access to data they shouldn’t have. However, what can go wrong will go wrong, and even if we try our best, we are still human beings. We all make mistakes, and since (personal) data is highly valuable, which is the main reason we take so many different measures in the first place, there will always be people who want to get this data to use and abuse it. There’s no system yet that protects us from social engineering, and data breaches happen on a regular basis. In addition to that, the “Internet of Things” (IoT) creates a larger attack surface (= number of possibilities to enter a system without permission) than ever before.

Therefore, it’s mandatory to work closely with our cyber security colleagues to be able to detect breaches and respond to them as quickly as possible, as well as to have a good backup and disaster recovery plan. Companies commonly use the very same data lake technology that powers their business use cases to also power their cyber security and threat hunting efforts.

I’ve worked quite a bit with Apache Metron, a cyber security platform running on a data lake, and have written quite a bit about it on this blog.

The Future: New Regulations and Governmental Policies and Technology

The number of governmental regulations will grow in the future and they will be very specific to individual countries. Some kinds of data are not allowed to leave certain countries. Some kinds of data will always be inspected by certain governments when they leave the country. New and additional data privacy and governance regulations will be published as the existing ones are being tested in the wild. More requirements always mean more complexity. This shouldn’t worry you, since you know your data platform and its databases, its security capabilities and its data governance capabilities. Furthermore, you have well-educated data architects and engineers who not only know how to translate business requirements into a data architecture, but also know how to translate the security and governance requirements of internal and external regulations and policies into the same data architecture.

I’m also pretty sure that there will be regulations that bring challenges which are difficult, if not impossible, to overcome at that time. The beautiful thing is that all companies affected by such a regulation will face the same challenge. They might find their own specific ways to overcome it, or – and that’s what happened multiple times in the past – companies work together on open source software to overcome those challenges together.

I Barely Used the Word “Cloud” in this Article! What’s Wrong with Me?

Cloud is just one (major) option to store and process the data, and it brings challenges as well as opportunities. Your cloud provider of choice might not have a data center in the country where you produce the data (= challenge). You might not have a data center in the country where you produce the data, but the cloud provider has one (= opportunity). Basically, treat cloud as part of your tool set to solve challenges and use it as you would use every tool, knowing that it has advantages and drawbacks.

Outlook

This article roughly described the history of security and data governance of data lakes (as far as you can put those items on a strict timeline). Each of those historic additions to the data lake security and governance ecosystem is an essential building block and tool, and all of them are still as relevant as at the time of their introduction. It’s up to you to put them to use and leverage all of their capabilities to make your life easier and your data more secure, manageable and compliant.
